Machine Learning Strategy (Part 2)

This is the second post in my series on machine learning strategy. It covers human-level performance, the bias-variance tradeoff, and how to improve an algorithm iteratively.

Human-Level Performance

- Human-level performance is the performance that a human can achieve on the task, e.g. by looking at the data.

- An algorithm should at least reach human-level performance; if it does not, there is potential for improvement.

- Improvement can be achieved by, e.g.:

  - Generating more training data (e.g. using humans)

  - Manually analysing the observations on which the algorithm performs badly

  - Analysing the bias-variance tradeoff (see later)

- Humans and computers may need the data in different forms: a human can take in the information of a picture just by looking at it, whereas for a computer this information has to be converted into numbers.

- The Bayes error is the lowest error that any algorithm could achieve on the task; in theory it is never higher than the human-level error.

Bias and Variance

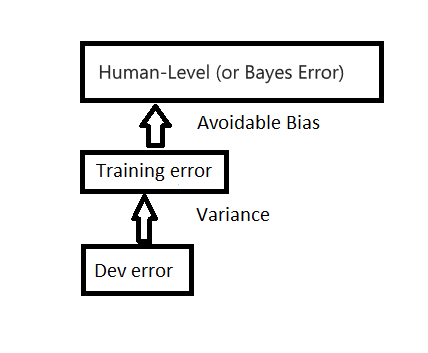

There is a tradeoff between bias and variance when training machine learning algorithms. Here I distinguish between avoidable bias and variance:

- Avoidable bias: Difference in performance between the training data and the Bayes error (approximated here by human-level performance)

- Variance: Difference in performance between training data and development data.

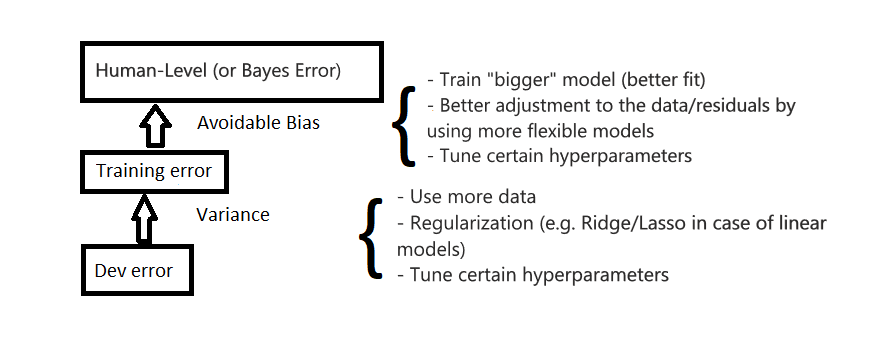

Possible improvements:

Notes:

- Avoidable bias should be small, but not too small: a training error pushed far below human-level error is a sign of overfitting

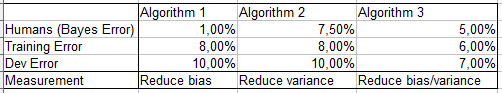

- Depending on the human-level, training and dev errors, either bias reduction or variance reduction should be targeted

- If the algorithm's dev set performance is better than human-level performance, there is no clear indicator of whether bias or variance should be reduced

Examples:
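As one illustration of the notes above, here is a minimal Python sketch that computes both gaps and suggests what to work on first. The function name `diagnose`, the rule of picking the larger gap, and all error rates are my own assumptions for illustration; human-level error is used as a stand-in for the Bayes error.

```python
def diagnose(human_error, train_error, dev_error):
    """Return (avoidable_bias, variance, focus) for error rates given in percent.

    Assumption: human-level error is used as a proxy for the Bayes error.
    """
    avoidable_bias = train_error - human_error  # gap between training and human-level error
    variance = dev_error - train_error          # gap between dev and training error

    if dev_error < human_error:
        # Better than human-level: the two gaps no longer give a clear signal.
        focus = "unclear"
    elif avoidable_bias > variance:
        focus = "bias"
    else:
        # Ties are assigned to variance in this simple sketch.
        focus = "variance"
    return avoidable_bias, variance, focus


# Hypothetical error rates in percent (human, train, dev).
print(diagnose(1.0, 8.0, 10.0))   # large gap between human and train error
print(diagnose(7.5, 8.0, 10.0))   # large gap between train and dev error
print(diagnose(5.0, 3.0, 4.0))    # dev error already below human-level error
```

In the first call the avoidable bias (7 points) dominates, in the second the variance (2 points against 0.5), and in the third the function returns "unclear" because the dev error is already below the human-level error.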

Error analysis

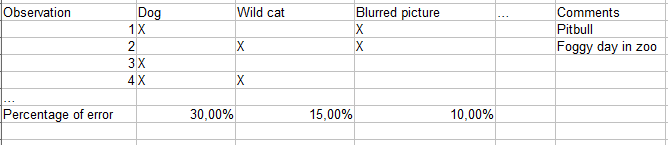

The following steps can be carried out in an error analysis:

- Collect the observations that cause problems (misclassification, large deviation)

- Identify the cause of each problem

- Create a table showing each cause's share of the total error

- Identify the main causes responsible for the poor performance

Example for image classification with cats:
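A minimal sketch of the tallying step in Python, assuming each misclassified image has been given exactly one cause by hand. The cause labels (`blurry`, `dog`, `mislabeled`), the counts, and the helper name `error_breakdown` are made up for illustration and are not results from a real model.

```python
from collections import Counter


def error_breakdown(causes):
    """Return (cause, share of all errors in percent), largest share first."""
    counts = Counter(causes)
    total = sum(counts.values())
    return [(cause, 100.0 * n / total) for cause, n in counts.most_common()]


# Hypothetical hand-assigned causes for 10 misclassified cat images.
labeled_errors = ["blurry"] * 5 + ["dog"] * 3 + ["mislabeled"] * 2

for cause, share in error_breakdown(labeled_errors):
    print(f"{cause}: {share:.0f}%")
```

With these made-up labels, blurry images account for half of all errors, so that is where fixing the problem would pay off most.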

Concentrate on the problems that cause the lion's share of the poor performance:

- Can the problems be solved by adjusting the algorithm? (new features, other hyperparameters, other algorithms)

- Can the algorithm be improved by removing certain observations from the dataset? (e.g. outliers or wrongly labelled observations)

General advice:

- Set up train/dev/test data sets

- Build the first algorithm quickly (on train)

- Run a bias-variance analysis and an error analysis to detect the main problems (on train/dev)

- Make improvements in the data set/algorithm and analyse the resulting algorithm again (on train/dev)

- Do the final evaluation on test

→ "Build your first system quickly, then iterate to improve it"

→ In most cases data scientists build procedures/algorithms that are too complex rather than too simple, so think about the complexity of the machine learning system that you have built.
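The first step of the list above, setting up the three data sets, can be sketched in plain Python as follows. The function name `split_dataset`, the 60/20/20 proportions, and the fixed seed are assumptions chosen for illustration; in practice the right proportions depend on how much data is available.

```python
import random


def split_dataset(items, dev_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle items and split them into train, dev and test lists."""
    if not 0 <= dev_fraction + test_fraction < 1:
        raise ValueError("dev and test fractions must leave room for training data")

    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible

    n_test = int(len(shuffled) * test_fraction)
    n_dev = int(len(shuffled) * dev_fraction)

    test = shuffled[:n_test]
    dev = shuffled[n_test:n_test + n_dev]
    train = shuffled[n_test + n_dev:]
    return train, dev, test


# Hypothetical dataset of 100 observation ids.
train, dev, test = split_dataset(range(100))
print(len(train), len(dev), len(test))
```

The model is then fitted on `train`, analysed and improved using `train` and `dev`, and `test` is touched only once for the final evaluation.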

Next blog post

In the next blog post I will talk about what to do when the train/dev/test sets come from different distributions, how to learn from multiple tasks, and the advantages and disadvantages of end-to-end learning.

This blog post is partly based on a deep learning course on coursera.org that I took recently. Hence, a lot of credit for this post goes to Andrew Ng, who taught it.